AI has been the stock market darling ever since OpenAI launched ChatGPT in November 2022. It has helped the “Magnificent Seven”, the largest US technology companies, deliver big returns that have left most other businesses in the dust.

This AI wave has required a lot of computing power, both to teach large language models about our world and to refine how they answer users’ prompts. To provide that horsepower, a massive amount of money has been poured into building data centres that can house stacks of high-performance graphics processors, like those made famous by chip designer Nvidia. These chips don’t come cheap: in the third quarter of last year alone, almost $31 billion was spent on data centre chips. Some companies now spend more on capital expenditure in a single quarter than they did in a whole year before 2020.

And then there’s the electricity needed to make them go. US bank Morgan Stanley estimates that global data centre power demand jumped from roughly 15 terawatt-hours (TWh) to nearly 46TWh in 2024 alone. Another US bank, Wells Fargo, thinks electricity supplied to US data centres could reach 16% of current total US demand. Just last week, US President Donald Trump highlighted “Stargate”, an AI infrastructure joint venture between Japanese tech investor SoftBank, Emirati government investment arm MGX and US companies Oracle and OpenAI. Although the venture has headline funding of $500bn over four years, there is apparently no government money behind it.

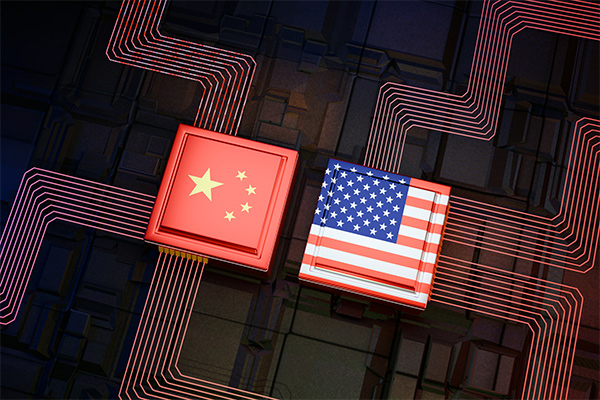

The sheer cost of AI investment caused a flurry of worry at the tail end of last year, but it was swiftly brushed off, and the share prices of most AI companies have continued to climb. Over the weekend, those concerns returned on news that a Chinese competitor, DeepSeek, had managed to develop a large language model for a fraction of the price.

DeepSeek has published an academic paper setting out how its model can learn and improve itself without human supervision. It purportedly trained a model of comparable size to those of the Silicon Valley giants using just 2,048 Nvidia H800 chips and roughly $5.6m. Although powerful in absolute terms, the H800 was released in early 2023 as an intentionally inferior product for the Chinese market, to comply with a US ban on exports of top-range AI chips to China. It had about half the power of the version sold in the West. A chip from March 2023 is ancient in AI terms and has since been superseded several times. The H800 was a handicapped version of Nvidia’s H100, and Nvidia says its latest GB200 Superchip offers up to 30 times the H100’s performance on some AI workloads.

If DeepSeek can create a large language model with a fraction of the investment required by its American rivals, that raises questions about whether their competitive position is as unassailable as most imagine, and casts some doubt on whether the assumed trends in power consumption and investment will play out as forecast. This has led to substantial falls in the share prices of US AI companies ahead of the market open on Monday.

The market’s knee-jerk reaction takes this threat at face value; however, there are reasons to be sceptical. China has claimed in the past, for example, to have cracked “extreme ultraviolet lithography”, the technique required to manufacture top-end semiconductor chips. That turned out to be false. Is it really conceivable that China has now achieved on a shoestring budget what the leading US technology companies have had to spend billions on? US investment bank JPMorgan, for one, thinks this is highly improbable. It is also worth noting that DeepSeek’s offering does not appear to be accurate enough for proper commercial use. Moreover, western companies and governments would almost certainly be reluctant to adopt a Chinese solution owing to security concerns.

It does feel as though a lot of investors have been looking for an excuse to take profits on, or bet against, the chip designers and makers after a very strong run. Even so, the threat to US AI superiority is real enough to demand some robust answers to these capital investment concerns, which, as mentioned earlier, are not new.